Big data analysis

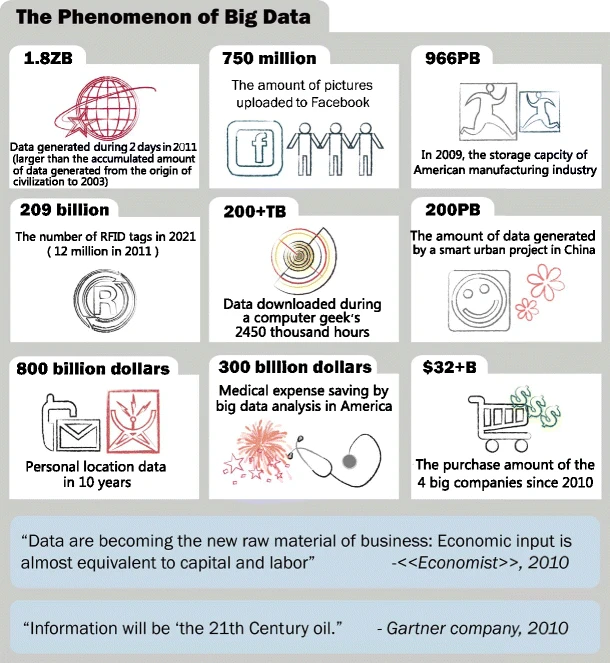

Big data presents opportunities for analysts to uncover new knowledge and gain new insights into real-world problems. However, its massive scale and complexity presents computational and statistical challenges. These include scalability issues, storage constraints, noise accumulation, spurious correlations, incidental endogeneity and measurement errors. In Figure 1, Chen, Mao, and Liu (2014) review the size of big data for different sectors of business. Addressing these challenges demands innovative approaches in both computation and statistics. Traditional methods, effective for small and moderate sample sizes, often falter when confronted with massive datasets. Thus, there is a pressing need for innovative statistical methodologies and computational tools tailored to the unique demands of big data analysis.

Computational solutions for big data analysis

Computer engineers often seek more powerful computing facilities to reduce computing time, leading to the rapid development of supercomputers over the past decade. These supercomputers boast speeds and storage capacities hundreds or even thousands of times greater than those of general-purpose PCs. However, significant energy consumption and limited accessibility remain major drawbacks. While cloud computing offers a partial solution by providing accessible computing resources, it faces challenges related to data transfer inefficiency, privacy and security concerns. Graphic Processing Units (GPUs) have emerged as another computational facility, offering powerful parallel computing capabilities. However, recent comparisons have shown that even high-end GPUs can be outperformed by general-purpose multi-core processors, primarily due to data transfer inefficiencies. In summary, neither supercomputers, cloud computing, nor GPUs have efficiently solved the big data problem. Instead, there is a growing need for efficient statistical solutions that can make big data manageable on general-purpose PCs.

Statistical solutions for big data analysis

In the realm of addressing the challenges posed by big data, statistical solutions are relatively novel compared to engineering solutions, with new methodologies continually under development. Currently available methods can be broadly categorized into three groups:

- Sampling: This involves selecting a representative subset of the data for analysis instead of analysing the entire dataset. This approach can significantly reduce computational requirements while still providing valuable insights into the underlying population.

- Divide and conquer: This approach involves breaking down the large problem into smaller, more manageable sub problems. Each sub problem is then independently analysed, often in parallel, before combining the results to obtain the final output.

- Online updating of streamed data: The statistical inference is updated as new data arrive sequentially.

In recent years, there has been a growing preference for sampling over divide and recombine methods in addressing a range of regression problems. Meanwhile, online updating is primarily utilized for streaming data. Furthermore, when a large dataset is unnecessary to confidently answer a specific question, sampling is often favoured, as it allows for analysis using standard methods.

Sampling algorithms for big data

The literature presents two strategies to resolve the primary challenge of how to acquire an informative subset that efficiently addresses specific analytical questions to yield results consistent with analysing the large data set.

They are:

- Sample randomly from the large dataset using subsampling probabilities determined via an assumed statistical model and objective (e.g., prediction and/or parameter estimation) (Wang, Zhu, and Ma 2018; Yao and Wang 2019; Ai, Wang, et al. 2021; Ai, Yu, et al. 2021; Lee, Schifano, and Wang 2021, 2022; Zhang, Ning, and Ruppert 2021)

- Select samples based on an experimental design (Drovandi et al. 2017; Wang, Yang, and Stufken 2019; Cheng, Wang, and Yang 2020; Hou-Liu and Browne 2023; Reuter and Schwabe 2023; Yu, Liu, and Wang 2023).

As of now in this package we focus on the subsampling methods

- Leverage sampling by Ma, Mahoney, and Yu (2014) and Ma and Sun (2015).

- Local case control sampling by Fithian and Hastie (2015).

- A- and L-optimality based subsampling methods for Generalised Linear Models by Wang, Zhu, and Ma (2018) and Ai, Yu, et al. (2021).

- A-optimality based subsampling for Gaussian Linear Model by Lee, Schifano, and Wang (2021).

- A- and L-optimality based subsampling methods for Generalised Linear Models under response not involved in probability calculation by Zhang, Ning, and Ruppert (2021).

- A- and L-optimality based model robust/average subsampling methods for Generalised Linear Models by Mahendran, Thompson, and McGree (2023).

- Subsampling for Generalised Linear Models under potential model misspecification as by Mahendran, Thompson, and McGree (2025), Adewale and Wiens (2009) and Adewale and Xu (2010).